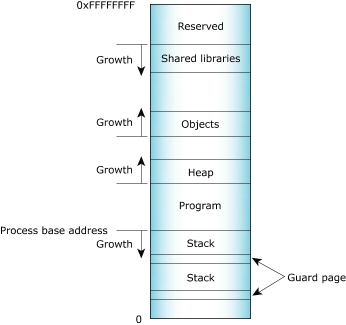

- program

- stack

- shared library

- object

- heap

Process memory layout on an x86.

The Memory Information and Malloc Information views of the QNX System Information perspective provide detailed, live views of a process's memory. For more information, see the Getting System Information chapter.

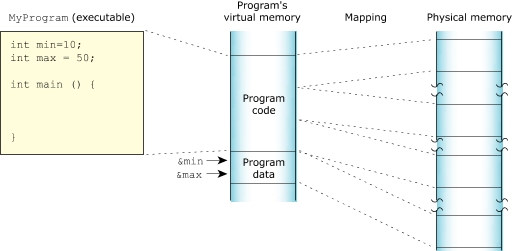

Program memory

Program memory holds the executable contents of your program. The code section contains the read-only execution instructions (i.e. your actual compiled code); the data section contains all the values of the global and static variables used during your program's lifetime:

The program memory.

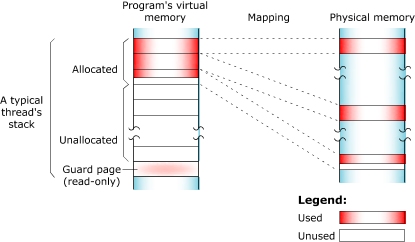

Stack memory

Stack memory holds the local variables and parameters your program's functions use. Each process in Neutrino contains at least the main thread; each of the process's threads has an associated stack. When the program creates a new thread, the program can either allocate the stack and pass it into the thread-creation call, or let the system allocate a default stack size and address:

The stack memory.

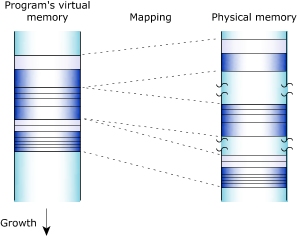

When your program runs, the process manager reserves the full stack in virtual memory, but not in physical memory. Instead, the process manager requests additional blocks of physical memory only when your program actually needs more stack memory. As one function calls another, the state of the calling function is pushed onto the stack. When the function returns, the local variables and parameters are popped off the stack.

The used portion of the stack holds your thread's state information and takes up physical memory. The unused portion of the stack is initially allocated in virtual address space, but not physical memory:

Stack memory: virtual and physical.

At the end of each virtual stack is a guard page that the microkernel uses to detect stack overflows. If your program writes to an address within the guard page, the microkernel detects the error and sends the process a SIGSEGV signal.

As with other types of memory, the stack memory appears to be contiguous in virtual process memory, but isn't necessarily so in physical memory.

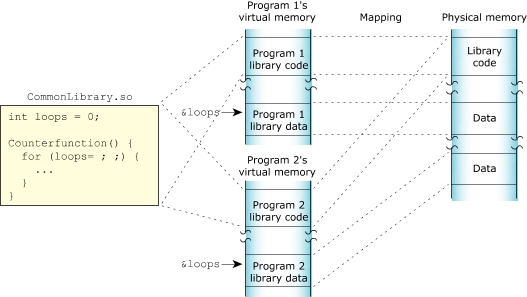

Shared-library memory

Shared-library memory stores the libraries you require for your process. Like program memory, library memory consists of both code and data sections. In the case of shared libraries, all the processes map to the same physical location for the code section and to unique locations for the data section:

The shared library memory.

Object memory

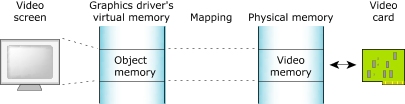

Object memory represents the areas that map into a program's virtual memory space, but this memory may be associated with a physical device. For example, the graphics driver may map the video card's memory to an area of the program's address space:

The object memory.

Heap memory

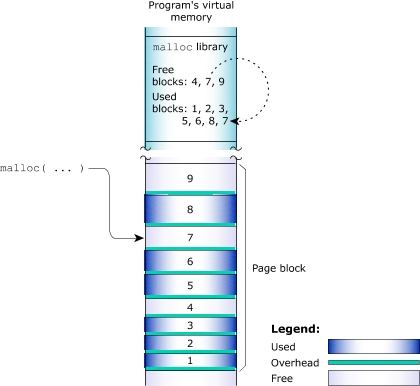

Heap memory represents the dynamic memory used by programs at runtime. Typically, processes allocate this memory using the malloc, realloc, and free functions. These calls ultimately rely on the mmap function to reserve memory that the malloc library distributes.

The process manager usually allocates memory in 4 KB blocks, but allocations are typically much smaller. Since it would be wasteful to use 4 KB of physical memory when your program wants only 17 bytes, the malloc library manages the heap. The library dispenses the paged memory in smaller chunks and keeps track of the allocated and unused portions of the page:

The heap memory

Each allocation uses a small amount of fixed overhead to store internal data structures. Since there's a fixed overhead with respect to block size, the ratio of allocator overhead to data payload is larger for smaller allocation requests.

When your program uses the malloc function to request a block of memory, the malloc library returns the address of an appropriately sized block. To maintain constant-time allocations, the malloc library may break some memory into fixed blocks. For example, the library may return a 20-byte block to fulfill a request for 17 bytes, a 1088-byte block for a 1088-byte request, and so on.

When the malloc library receives an allocation request that it can't meet with its existing heap, the library requests additional physical memory from the process manager. These allocations are done in chunks called arenas. By default, the arena allocations are performed in 32 KB chunks. The value must be a multiple of 4 KB, and currently is limited to less than 256 KB. When memory is freed, the library merges adjacent free blocks within arenas and may, when appropriate, release an arena back to the system.

For detailed information about arenas, see Dynamic memory management in the QNX Neutrino System Architecture guide.

How virtual memory is mapped to physical memory.

For more information about the heap, see Dynamic memory management in the QNX Neutrino System Architecture guide.